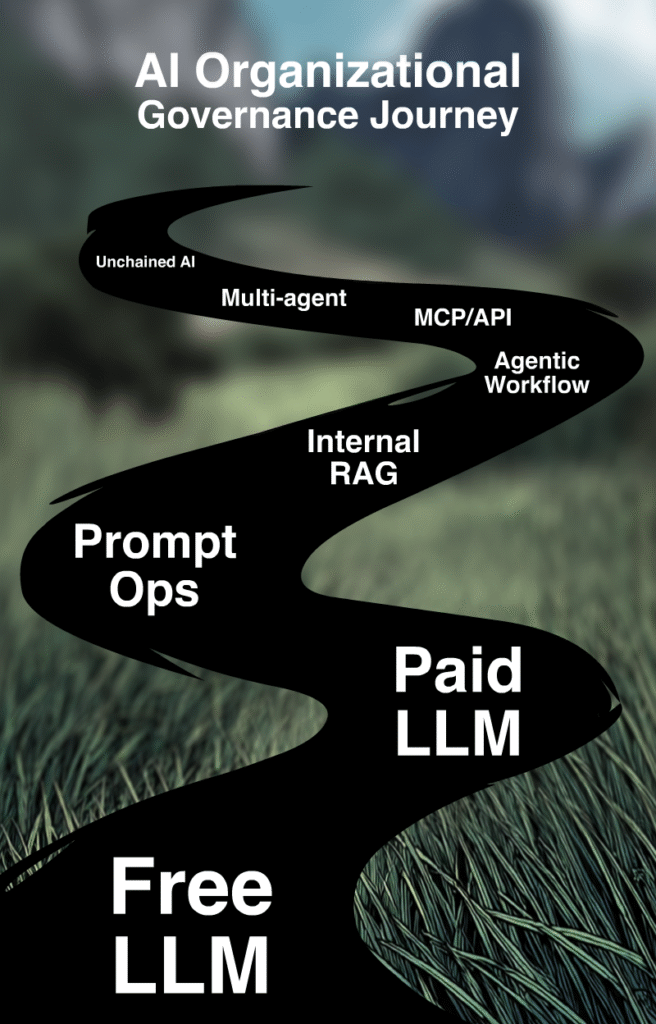

Most companies don’t “go AI” overnight. They inch forward, painfully, one process or team at a time. What does the technical journey look like, and what practical use cases open with each phase? Join us on a road trip, from denial to a disciplined, AI-Powered organization. Understand when to build governance, operationalize prompts, and where Agents and multi-Agent systems (“swarms”) fit in. Finally, build your own “unchained” Small Language Model at home to make AI work for you.

Fiona Passantino, AI Leadership

From Denial to Discipline

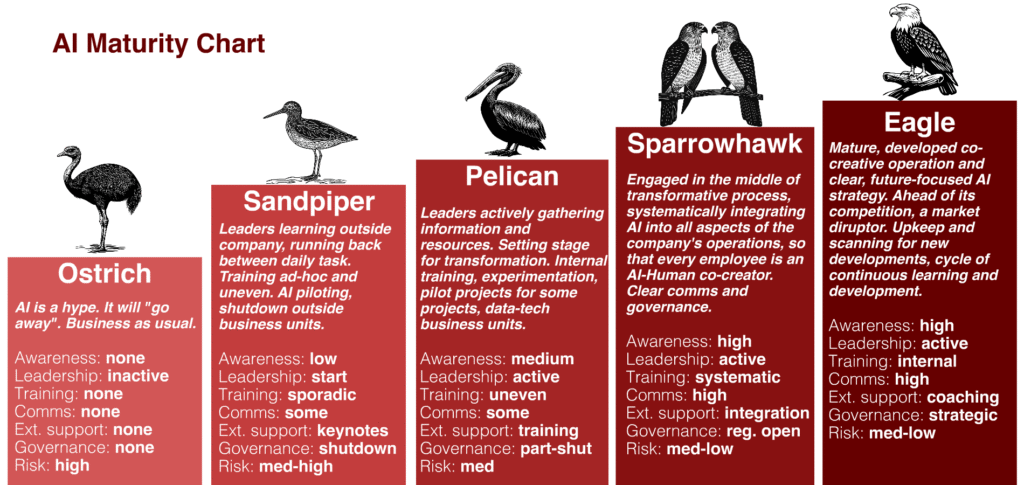

Most organizations start their AI Integration journey like ostriches, heads down in the sand. Hoping the AI hype goes away, so that everyone can stop making cat videos and get back to work.

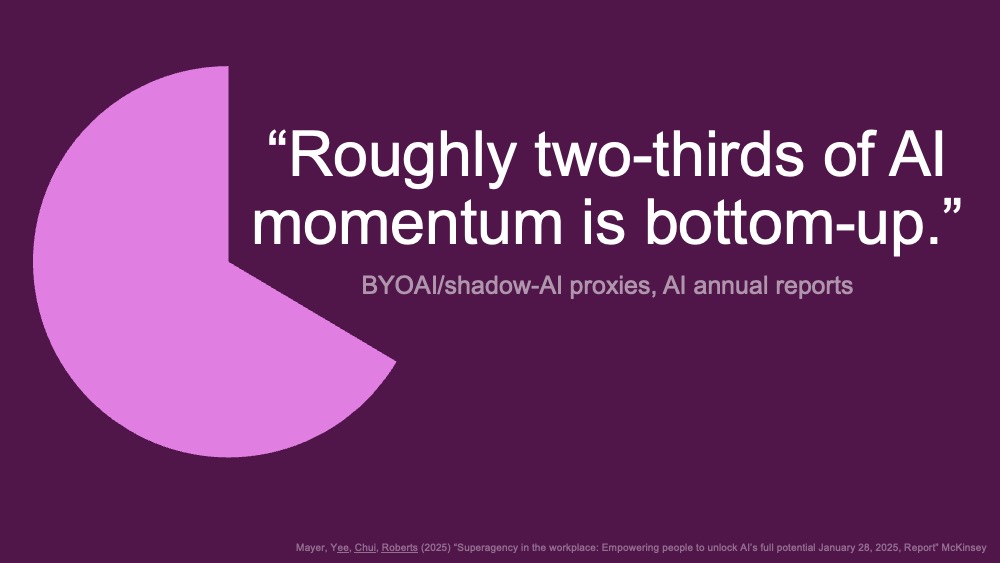

Once the people who do the work realize that AI can execute brilliantly on the more awful parts of their jobs, AI finds its way into the workflow, whether leaders like it or not. Roughly two-thirds of AI momentum is bottom-up; the workforce adopts AI and leaders follow with governance, training and budget for premium solutions.[i]

Mostly because they must.

Now sold on the promise of AI, organizations will become Sandpipers; a few brave Early Adopters venture out, timidly, to learn new tools and tricks, only to come running back before the tide pulls them out to sea. One small solution here, a bit of optional company-sponsored training there. But no organizational integration in a structured, systematic way.

The Sandpipers become Pelicans; no longer timid, actively scooping up mouthfuls of information to share with the team. By now, the entire organization has some rules of the road, premium models to work with, and pilots in progress.

The Sparrowhawk hunts in tandem: one flies high to spot the prey and signals to the low-flier, who swoops in for the kill. Together, they are a formidable team. The Sparrowhawk organization has personal AI assistants for every worker, making each an AI-Human brain.

Finally, they fly like Eagles, scanning the horizon for new developments, new models and new modes, confident that AI is here to stay just like the internet, the smartphone and social media.

The Tech Break-Down

What do these phases look like in terms of tech and processes?

Phase 1: Free LLM

Your organization is experimenting with free frontier AI models for low-risk tasks and discovering prompt patterns. You are attempting to learn the landscape, switching from Gemini to ChatGPT and Copilot and back again, a method known as “Model Surfing”.

In the absence of governance, there will be plenty of “Secret Cyborgs” (workers who use AI quietly, from home computers, and call the work their own). Leaders work on setting up governance and data rules, and constrain all AI pilots to non-sensitive data areas. Set parameters, such as no client data and no HR, health, finance or strategic information, and establish transparency guidelines for basic processes.

Here are a few use cases.

- Sales: read and qualify leads, draft a 3-line reply with cleaned prompts, follow up.

- Support: classify ticket intent, suggest reply from policy, list steps to resolve.

- HR: write job descriptions, create onboarding materials, summarize interview notes, outline coaching for internal conflict resolution.

- Finance: pull business intelligence from (cleaned) databases, draft payment reminders, compose vision documents.

- Ops: write out standard operating procedures, create pre-flight checklists, generate acceptance criteria.

- Marketing: boil down long documents or articles into 150-word posts, regionalize and translate content, generate SEO keywords.

Phase 2: Paid LLM

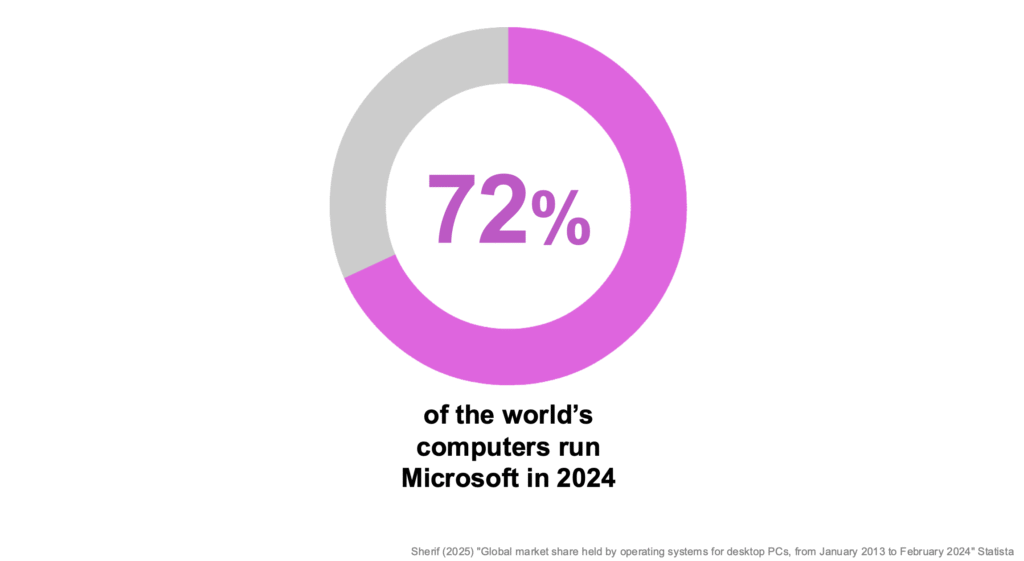

It will eventually be clear which models work for the organization; it’s time to invest in a favorite. Most of the time, this is Microsoft Copilot. After all, 72% of the world’s organizations run Microsoft, so this feels like a low-risk, obvious choice[ii].

Good integration requires a bit of work from the leader. Leaders need to be educated about admin controls, so that employees can log in with Single Sign-On. A Data Processing Agreement needs to be set up: this is a GDPR-required contract between the organization (the data controller) and the AI vendor (the data processor) that spells out what data is processed in an off-site data center, for what purpose, how it’s stored, how long it’s retained, how breach notices are communicated and how data-subject requests are handled. An Analytics Dashboard will tell you who’s using AI, how often, and at what speeds (“latency”), as well as success and failure rates for top prompts.

A wise leader will negotiate pricing based on tokens processed rather than on the number of Humans using the tools. This puts a few guardrails in place in terms of cost and will cover the team as their usage habits shift. Some will be power-users while others will only drift into their AI panels when attending mandatory training.
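To see why the pricing basis matters, here is a back-of-the-envelope comparison in Python. Every figure in it (the seat price, the token price, the usage per person) is a made-up placeholder, not a vendor quote; swap in the numbers from your own contract.

```python
# All prices and usage figures are hypothetical placeholders.
SEAT_PRICE_PER_MONTH = 30.00       # assumed flat fee per licensed Human
PRICE_PER_MILLION_TOKENS = 5.00    # assumed blended token price

def seat_cost(num_users: int) -> float:
    """Monthly cost when paying per licensed user."""
    return num_users * SEAT_PRICE_PER_MONTH

def token_cost(monthly_tokens_per_user: list[int]) -> float:
    """Monthly cost when paying for tokens actually processed."""
    total_tokens = sum(monthly_tokens_per_user)
    return total_tokens / 1_000_000 * PRICE_PER_MILLION_TOKENS

# A team of 100: ten power-users and ninety occasional users.
usage = [4_000_000] * 10 + [100_000] * 90

print(f"Per seat:  {seat_cost(len(usage)):.2f}")
print(f"Per token: {token_cost(usage):.2f}")
```

With these assumed numbers, the per-token bill is a fraction of the per-seat bill because most of the team barely uses the tool. A team made up entirely of power-users would tip the balance the other way, so run the sum with real usage data before negotiating.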

In the end, it doesn’t matter which LLM you choose. It’s how AI is used, and for which cases. One good hammer with comprehensive training is far more effective than a toolbox of fancy, expensive devices that no one understands.

Phase 3: PromptOps

Once commercial AI becomes part of your workflow, it’s time to operationalize how it’s used. “PromptOps” means treating prompts like code: as a company asset that is versioned, tested, governed, and shipped (internally) on a release cycle. Think of it like DevOps for prompts.

What does this mean? It means creating a reusable, central, accessible library of prompt templates that work for specific purposes. When everyone prompts their models in a vacuum, the same wheel gets re-invented over and over; a shared library breaks that cycle. It also allows leaders to implement policies that enforce ethical, legal and regulatory compliance in prompts, in line with data privacy regulations and organizational standards. PromptOps allows you to adapt to changes more quickly and adopt standards for the organization.
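As a rough illustration of what “prompts as versioned assets” can look like, here is a minimal in-memory prompt library in Python. The class names (`PromptTemplate`, `PromptLibrary`), the fields and the example prompt are all hypothetical; a real setup would more likely live in a Git repository or a dedicated tool.

```python
from dataclasses import dataclass
from string import Template
from typing import Optional

@dataclass(frozen=True)
class PromptTemplate:
    name: str
    version: str
    owner: str       # the team accountable for this prompt
    body: Template

    def render(self, **fields: str) -> str:
        # substitute() raises KeyError when a required field is missing,
        # so a half-filled prompt never reaches the model.
        return self.body.substitute(**fields)

class PromptLibrary:
    def __init__(self) -> None:
        self._store: dict[tuple[str, str], PromptTemplate] = {}
        self._latest: dict[str, str] = {}

    def publish(self, prompt: PromptTemplate) -> None:
        key = (prompt.name, prompt.version)
        if key in self._store:
            # Published versions are immutable; changes need a new version.
            raise ValueError(f"{prompt.name} {prompt.version} already published")
        self._store[key] = prompt
        self._latest[prompt.name] = prompt.version

    def get(self, name: str, version: Optional[str] = None) -> PromptTemplate:
        return self._store[(name, version or self._latest[name])]

library = PromptLibrary()
library.publish(PromptTemplate(
    name="support-reply",
    version="1.0.0",
    owner="support-team",
    body=Template("Reply in a $tone tone, citing policy $policy_id:\n$ticket"),
))

text = library.get("support-reply").render(
    tone="friendly", policy_id="P-12", ticket="My invoice is wrong."
)
print(text)
```

Freezing each published version means a prompt that passed review stays exactly as reviewed, and anyone can pin a known-good version while a newer one is being tested.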

Phase 4: Internal RAG

Time to turn inward and use AI to search and generate off internal data. A “RAG” AI (Retrieval Augmented Generation) means that you set up a basic chatbot that looks up your own information at the moment a question is asked, rather than being trained on it, which allows your team to get the right information faster. Something like a little Google inside your company. AI helps write the reply and shows where it came from.

The key difference between RAG AI and simple search is that bot-search doesn’t only find the right documents, it understands intent with each query, reads the right passages in the data lake, drafts the answer in the correct tone of the company, and shows the source links so it can be trusted.
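The retrieve-then-generate pattern can be sketched in a few lines of Python. This toy version scores passages by simple word overlap and stops at building the prompt; production systems typically use embeddings and a vector index instead, and then send the prompt to a model. The document names and contents are invented for illustration.

```python
import re

# Hypothetical internal documents: source path -> text.
DOCS = {
    "hr/leave-policy.md": "Employees receive 25 days of paid leave per year.",
    "it/vpn-setup.md": "Install the VPN client and sign in with Single Sign-On.",
    "finance/expenses.md": "Submit expense claims within 30 days of purchase.",
}

def tokenize(text: str) -> set[str]:
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def retrieve(query: str, k: int = 2) -> list[tuple[str, str]]:
    """Return up to k passages sharing the most words with the query."""
    q = tokenize(query)
    ranked = sorted(
        DOCS.items(),
        key=lambda item: len(q & tokenize(item[1])),
        reverse=True,
    )
    # Drop passages with no overlap at all.
    return [(src, text) for src, text in ranked[:k] if q & tokenize(text)]

def build_prompt(query: str) -> str:
    """Assemble what would be sent to the model: passages plus the question."""
    context = "\n".join(f"[{src}] {text}" for src, text in retrieve(query))
    return (
        "Answer using only the passages below and cite the source in brackets.\n"
        f"{context}\n\nQuestion: {query}"
    )

print(build_prompt("How many days of paid leave do employees receive?"))
```

Because each passage travels with its source path, the model can cite where an answer came from, which is what makes the reply checkable.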

System connectors are important here. This is the pipeline that connects your people to your information. Your data might reside on SharePoint, Google Drive, Slack, CRM, ERP, Confluence or on a high-powered server in the basement. The RAG, at this stage, should be internal-only, and not facing external clients.

Phase 5: Agentic Workflow

AI Agents, once they are set up to carry out actions with your tools and functions, cross the boundary from using AI as a tool to incorporating it as a kind of digital colleague. An AI Agent is a running model, configured with specific instructions and tools, that can: look things up, pull data from systems, carry out simple updates, call tools and functions, and ping a Human when it’s unsure how to proceed.

This is not a robot army, but an intelligent system with a job to do that follows a checklist and executes. It closes small loops end-to-end and reports when done.

An agentic workflow might look like this:

Read request > Find info > Draft automated reply > Update the system > Update the CRM

Here is an example of a simple first support loop:

- A customer email comes in asking for a change to a product subscription.

- Agent gathers intent and classifies it.

- Agent searches help center documentation for context.

- Agent uses the CRM to identify the customer.

- Agent makes changes to the billing, following a checklist.

- Agent drafts a reply to the customer.

- Agent updates the ticket status.

- Agent asks a Human for help if the request is more complex.
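The loop above can be sketched as plain Python. Everything here is a stand-in: the `CRM` and `HELP_CENTER` dictionaries replace real systems, and keyword rules replace the model call that would normally classify the request. It only shows the shape of the checklist and the escalation path.

```python
from dataclasses import dataclass, field
from typing import Optional

# Hypothetical in-memory stand-ins for the CRM and help center.
CRM = {"ana@example.com": {"customer_id": "C-1", "plan": "basic"}}
HELP_CENTER = {"change_subscription": "Plan changes take effect immediately."}
KNOWN_PLANS = {"basic", "pro"}

@dataclass
class Ticket:
    customer_email: str
    body: str
    status: str = "open"
    reply: str = ""
    log: list[str] = field(default_factory=list)

def classify_intent(body: str) -> str:
    # A real Agent would ask the model; keyword rules keep this runnable.
    text = body.lower()
    if any(word in text for word in ("upgrade", "downgrade", "change")):
        return "change_subscription"
    return "unknown"

def requested_plan(body: str) -> Optional[str]:
    words = {w.strip(".,!?") for w in body.lower().split()}
    matches = KNOWN_PLANS & words
    return matches.pop() if len(matches) == 1 else None

def handle(ticket: Ticket) -> Ticket:
    intent = classify_intent(ticket.body)        # gather and classify intent
    context = HELP_CENTER.get(intent)            # search the documentation
    customer = CRM.get(ticket.customer_email)    # identify the customer
    plan = requested_plan(ticket.body)
    if context is None or customer is None or plan is None:
        ticket.status = "escalated_to_human"     # ask a Human for help
        ticket.log.append("escalated: request not covered by the checklist")
        return ticket
    customer["plan"] = plan                      # make the billing change
    ticket.log.append(f"plan changed to {plan}")
    ticket.reply = f"Your plan is now '{plan}'. {context}"  # draft the reply
    ticket.status = "resolved"                   # update the ticket status
    return ticket

done = handle(Ticket("ana@example.com", "Please upgrade my subscription to pro."))
print(done.status, "|", done.reply)
```

Note the default: anything the checklist does not clearly cover goes to a Human rather than being guessed at.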

Phase 6: MCP/API

MCP (Model Context Protocol) is an open standard for connecting Agents to tools and data sources in a controlled way. For the organization, it works like a playbook that sits above the model’s existing training: it spells out which tools and information an Agent can reach in a specific situation, so that a multi-step process runs in a way that’s informed, on-brand and consistent.

Here are a few concrete cases to consider.

- The MCP defines the menu of tools an Agent is allowed to use: “read knowledge base,” “create Zendesk ticket,” “update CRM note,” or “schedule meeting.”

- The MCP defines task owners, permissions, limits how often and how fast an Agent can operate, and which changes it’s allowed to make.

- When an Agent runs a task like “reschedule a demo and email the customer”, it calls only the scheduling tools and step by step checklist defined by the MCP.

If an Agent attempts a forbidden action, the MCP setup can be configured to catch it and ping the responsible Human-in-the-Loop.
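The allowlist-and-escalate idea can be illustrated in plain Python. To be clear, this is not the Model Context Protocol SDK or its API; `ToolGate`, the tool names and the call limit are hypothetical, and the sketch only shows the control logic such a layer enforces.

```python
from typing import Any, Callable

# Hypothetical policy: the menu of permitted tools and a per-task call limit.
ALLOWED_TOOLS = {
    "read_knowledge_base",
    "create_ticket",
    "update_crm_note",
    "schedule_meeting",
}
MAX_CALLS_PER_TASK = 5

class ToolGate:
    """Checks every tool call against the policy before running it."""

    def __init__(self, notify_human: Callable[[str], None]) -> None:
        self.notify_human = notify_human
        self.calls = 0

    def call(self, tool_name: str, tool_fn: Callable[..., Any], *args: Any) -> Any:
        if tool_name not in ALLOWED_TOOLS:
            self.notify_human(f"blocked forbidden tool: {tool_name}")
            return None
        if self.calls >= MAX_CALLS_PER_TASK:
            self.notify_human(f"call limit reached before: {tool_name}")
            return None
        self.calls += 1
        return tool_fn(*args)

alerts: list[str] = []
gate = ToolGate(notify_human=alerts.append)

booked = gate.call("schedule_meeting", lambda when: f"booked {when}", "Tuesday 10:00")
blocked = gate.call("delete_customer", lambda: "deleted")

print(booked)
print(alerts)
```

The forbidden call never runs; it only produces an alert for the Human-in-the-Loop.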

Phase 7: Multi-agent Systems (“swarms”)

A team of AI Agents that work together, either in sequence or in parallel, is an “AI Swarm”: one plans, one does, one checks. Swarms are useful for larger tasks that touch multiple tools and processes, and that also require another set of eyes to check the work before release. See AI Swarms for more detail.

An example of this workflow might look like this:

Agent 1: conducts customer support

Agent 2: controls for policy adherence

Agent 3: issues the refund

Agent 4: triggers the ticket close
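A sequential swarm like this one can be sketched as a pipeline of small functions, each standing in for an Agent. The refund limit, the `Case` fields and the agent functions below are hypothetical; in practice each step would wrap a model call and a real system.

```python
from dataclasses import dataclass, field

REFUND_LIMIT = 100.0  # hypothetical policy threshold

@dataclass
class Case:
    ticket_id: str
    requested_refund: float
    approved: bool = False
    refunded: float = 0.0
    status: str = "open"
    trail: list[str] = field(default_factory=list)

def support_agent(case: Case) -> Case:
    case.trail.append("support: refund request captured")
    return case

def policy_agent(case: Case) -> Case:
    # The second set of eyes: checks the request against policy.
    case.approved = case.requested_refund <= REFUND_LIMIT
    case.trail.append(f"policy: approved={case.approved}")
    return case

def refund_agent(case: Case) -> Case:
    if case.approved:
        case.refunded = case.requested_refund
        case.trail.append(f"refund: issued {case.refunded:.2f}")
    return case

def closing_agent(case: Case) -> Case:
    case.status = "closed" if case.approved else "needs_human"
    case.trail.append(f"close: status={case.status}")
    return case

PIPELINE = [support_agent, policy_agent, refund_agent, closing_agent]

def run(case: Case) -> Case:
    for agent in PIPELINE:
        case = agent(case)
    return case

small = run(Case("T-1", 40.0))
large = run(Case("T-2", 500.0))
print(small.status, small.refunded)
print(large.status, large.refunded)
```

Because the policy check sits between the request and the refund, a request over the limit never reaches the money-moving step and lands with a Human instead. The `trail` list doubles as an audit log of which Agent did what.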

Phase 8: Unchained AI

When it comes to enjoying a cappuccino (a basic office supply), we generally have two choices: go to the nearest café, or get a fancy machine and make it at home. When it comes to AI, the Eagle organization will be building its own in-house.

How does this work, and where does one start?

Setting up a Small Language Model (SLM) in-house involves running a compressed, high-performance model on your own hardware, such as a laptop or server. Find a version on an open model hub such as Hugging Face, or through a desktop tool such as LM Studio, and install it on a solid computer on your premises that potentially has access to your data.

Open Source models for local use are typically “quantized,” meaning their weights are reduced from larger, more precise numbers (16/32-bit) to smaller formats (8-bit, 4-bit), allowing them to fit on less expensive hardware while still delivering fast, usable results. Different configurations meet different business needs. A Q8 offers the highest quality of the three but requires more resources and internal talent to maintain it. A Q5 strikes a balance between quality and cost, while a Q4 is the smallest and usually the fastest, at the lowest quality.
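A quick way to judge whether a model will fit on your hardware is to estimate the size of its weights: number of parameters, times bits per weight, divided by eight to get bytes. The Python sketch below applies that to a 7-billion-parameter model, chosen purely as an example. Treat the results as a floor: real quantized files use slightly more bits per weight than their label suggests, and running the model needs extra memory on top of the weights.

```python
def weight_size_gb(num_params: float, bits_per_weight: float) -> float:
    """Approximate size of the model weights in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bits_per_weight / 8 / 1e9

PARAMS = 7e9  # example model size; an assumption for illustration

for label, bits in [("16-bit", 16), ("Q8", 8), ("Q5", 5), ("Q4", 4)]:
    print(f"{label:>6}: about {weight_size_gb(PARAMS, bits):.1f} GB")
```

Going from 16-bit to Q4 cuts the weights to a quarter of their original size, which is the difference between needing a server-class GPU and fitting on a well-equipped laptop.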

Once installed on a test machine, try out a few models locally, experimenting with prompts to find the one that works best in your environment. Beware of the “unchained” varieties that have no guardrails – they will engage with you on (literally) any subject, can use intense language and make questionable recommendations.

Download and install your model, copying the weights (usually in formats like GGUF for llama.cpp or safetensors for vLLM/Transformers) into your server’s storage. The closer this model resides to your data, the safer. A GGUF file bundles the weights with the tokenizer and metadata in a single file, which allows for rapid loading; safetensors stores the weights only, with the tokenizer and configuration shipped as separate files alongside it.

Next, you will need a coder and trainer to work together to train the model on your documents. Your Unchained model should be able to perform on specific, narrow tasks that require speed and privacy.

These small systems are good for tagging emails and tickets, automatically categorizing them based on their content, and routing them to the right department or team. This saves time, ensures quick responses, and allows the first step to happen on a 24/7 timetable. They can also perform automated redaction of personally identifiable information (PII) or fill out forms based on documents, reducing manual work and maintaining privacy standards.

Diving In

No matter where you are in your journey, the invitation here is to always aim higher than your current experiment. Set guardrails, pick a primary model, and teach your Humans to use it. Training is always an essential part of organizational AI integration. Move from Agents to MCP to Swarms with intent, to fix pain points rather than to “do AI”. You’ll move from scattered pilots to a durable capability, enabling faster decisions, cleaner processes, and teams that do more of what they love. Commit to one meaningful upgrade per quarter; name owners, fund it, review it. The organizations that grow in their knowledge and use of AI will define the coming decade.

About Fiona Passantino

Fiona helps empower working Humans with AI integration, leadership and communication, maximizing connection, engagement and creativity to bring more joy and inspiration into the workplace. A passionate keynote speaker, trainer, facilitator and coach, she is a prolific content producer, host of the podcast “Working Humans” and award-winning author of the “Comic Books for Executives” series. Her latest book is “The AI-Powered Professional”.

[i] Mayer, Yee, Chui, Roberts (2025) “Superagency in the workplace: Empowering people to unlock AI’s full potential.” McKinsey, Report, January 28, 2025.

[ii] Maldonado (2024) “CrowdStrike and Microsoft: What can we learn about what no one is willing to question?” Heinrich Böll Stiftung. https://th.boell.org/en/2024/08/14/crowdstrike-microsoft