AI-Powered Leadership

Fiona Passantino, Early March 2024

Designed to make sure Artificial Intelligence (AI) is used safely and ethically across Europe, the EU AI Act works like a rulebook for AI development and use. AI is a powerful tool, but it can also be risky. What’s the EU AI Act all about, and what do you need to know? Here are the basics in plain Human language.

What’s the EU AI Act?

On March 13, European lawmakers officially passed the Artificial Intelligence Act. By far the strongest law of its kind, this groundbreaking act defines and regulates the development and use of Artificial Intelligence (AI) systems in the private and public sectors[i]. The purpose is to ensure that AI is built and integrated into our systems in a way that protects our rights and safety, while still allowing for innovation and advancement as we move into the next phases of AI development.

So far, this law has all affected parties complaining in equal measure, which is generally a sign of some degree of fairness. For Human users, it outlines a number of basic rights, much like those other EU agreements give us when we travel, use the internet and enter into cross-border contracts.

Ever since we sent out our first prompts, the European Parliament has been concerned about AI hallucinations (AI’s tendency to produce factual errors and plain-old make stuff up) and about our Human tendency toward the viral dissemination of deepfakes and other manipulations of reality[ii]. Left unchecked, this can mislead us Humans in all sorts of ways, from swaying elections to exposure to liability, character defamation, impersonation, identity theft and more[iii].

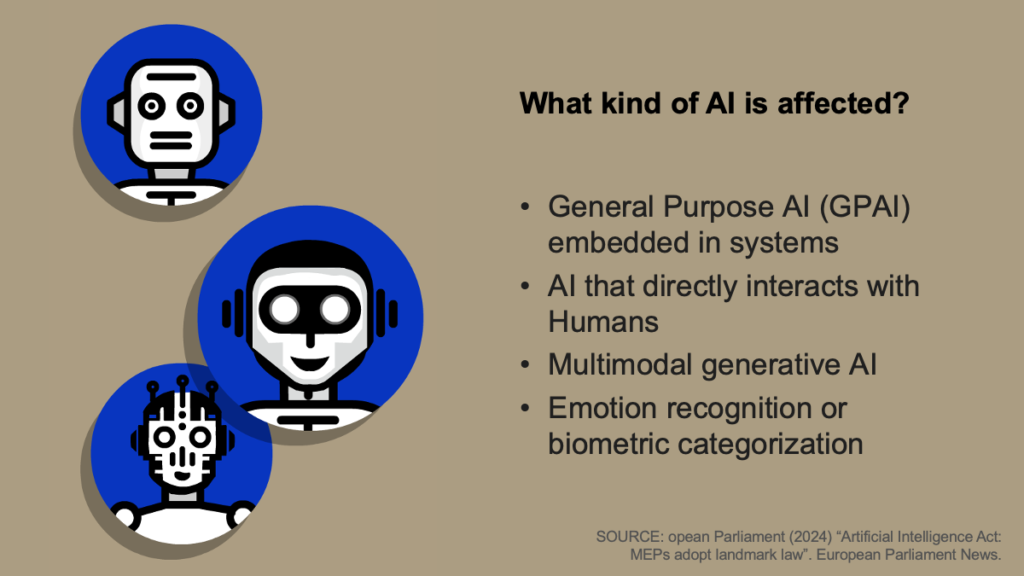

What kind of AI is affected?

Your friendly generative text AI tools (ChatGPT, Microsoft Copilot, Google Gemini or Claude) are mostly safe. The Act targets General Purpose AI (GPAI) embedded in systems we use every day, sometimes without our even being aware of it.

The AI systems targeted by the Act are those that directly interact with Humans (AI agents), those generating audio, image, video or musical content (Midjourney, DALL-E, etc.), those engaging in emotion recognition or biometric categorization (ShareArt) and those designed to create deepfakes (HeyGen, ElevenLabs)[i].

What are the different risk levels?

The law starts by sorting AI into a series of risk categories. It issues outright bans on the highest-risk types, regulates the lower-risk types, and ends with a list of basic Human (user) rights going forward.

Banned AI

The AI Act defines a list of prohibited AI practices: those that use subliminal or purposefully manipulative techniques to materially distort the truth and influence Human behavior. This covers AI that exploits Human vulnerabilities or carries a risk of significant harm, including biometric categorization systems based on sensitive information.

Think of social scoring systems run by private companies, real-time remote biometric identification, untargeted scraping of facial images, emotion inference, and predictive classification based solely on profiling or personality traits. Think about those giant databases of people’s faces pulled from CCTV footage without permission[i].

Imagine a car with AI-supported systems. The car records your driving patterns and maps them alongside your personal characteristics and personal data, which results in your paying a higher insurance premium than another driver, even if neither of you has ever had an accident.

There are exceptions, such as when these systems are used by law enforcement to search for trafficking victims or to prevent terrorist attacks. Emotion inference can make medical procedures more tolerable. Used correctly, AI-driven biometric categorization and facial recognition cross-checked with sensitive information can benefit our health and safety.

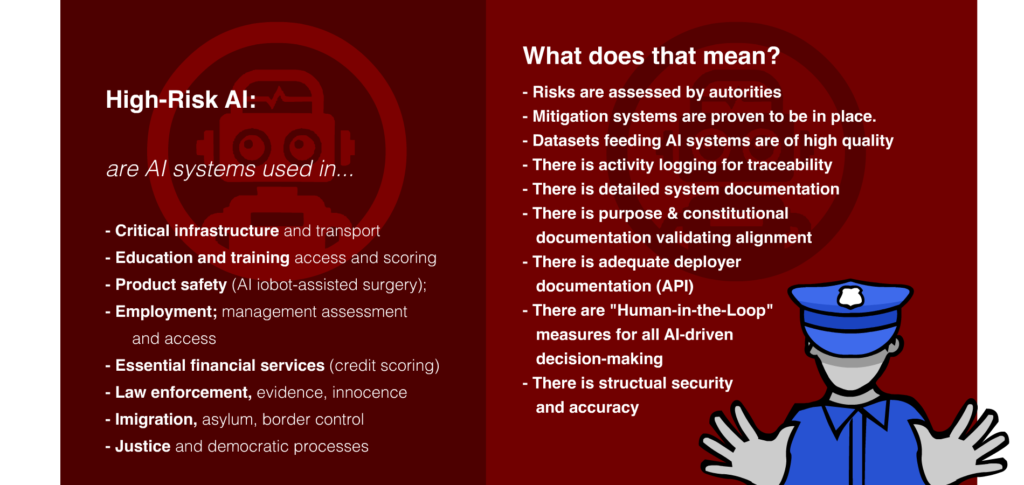

High-Risk AI: This category addresses AI systems used in critical tasks such as transportation, education or law enforcement. Think of AI used in aviation guidance, vehicle security, toys, lifts, buildings, security, pressure tools and personal protective equipment. There is also AI for biometric identification, components within critical infrastructure, education, employment, credit scoring, law enforcement, migration and the democratic process.

Whether these applications are private or public, OEM or proprietary, they need to go through extra fairness and safety checks and be given a badge of compliance before entering the marketplace. Providers of high-risk AI systems must meet strict requirements to ensure that their systems are trustworthy, transparent and accountable: they must conduct risk assessments, use high-quality data, document their technical and ethical choices, keep performance records, inform users about the nature and purpose of their systems, enable Human oversight and intervention, and ensure accuracy, robustness and cybersecurity.

Finally, these providers must test their systems for conformity before release and register them in a publicly accessible EU database.

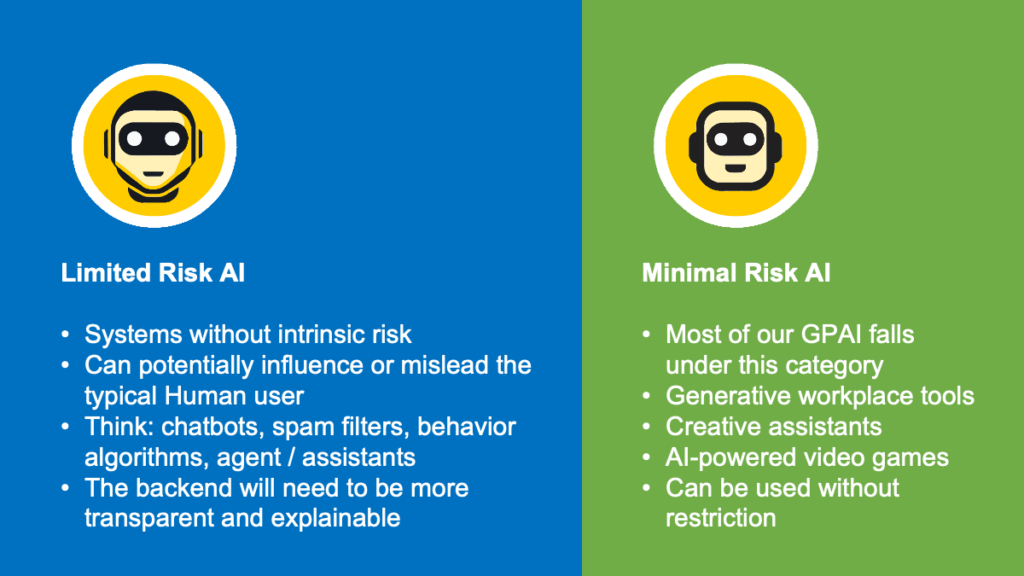

Limited Risk AI: These systems do not bear intrinsic risk but can potentially influence or mislead the typical Human user. This includes chatbots, spam filters, behavior algorithms and assistants. The workings behind these systems will need to be more transparent and explainable.

Minimal Risk AI: Most of our GPAI falls under this category; it includes our generative workplace tools, creative assistants and AI-powered video games. In general, these systems can be used without restriction.

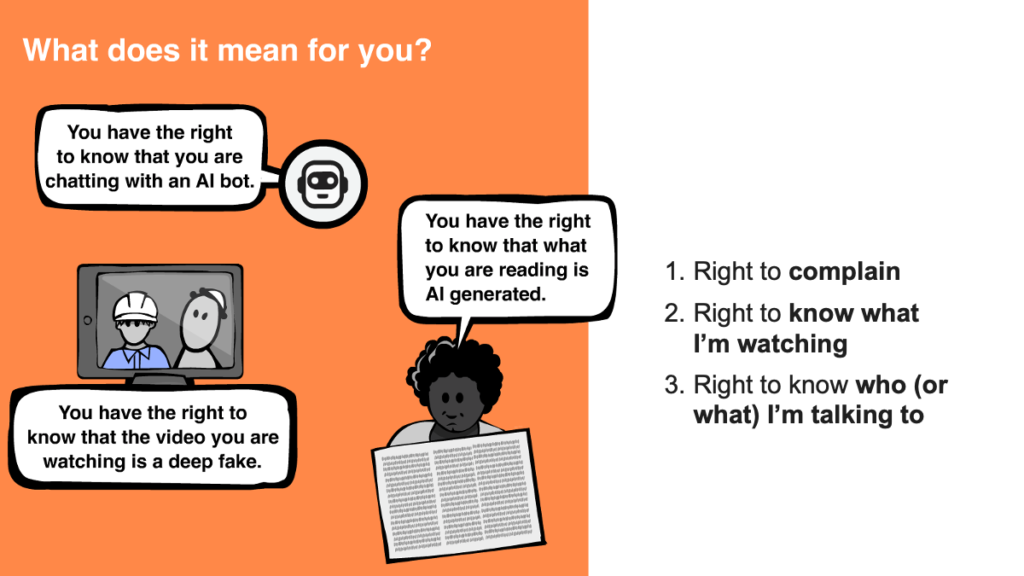

What are my rights as a Human?

The nice thing about this Act is that we Humans now get to enjoy certain rights baked into our AI experience.

- Right to complain

Humans have the right to hold system suppliers accountable for decisions that affect them, and to get an explanation. Imagine you apply for a job and are turned down; you suspect the AI software that filtered out your application uses a discriminatory algorithm. Under this Act you would have the right to petition the company for an explanation of the decision-making process. Businesses that use high-risk AI need to be transparent and make sure their systems are accurate.

- Right to know what I’m watching

Humans have the right to know whether the cat videos they are seeing on YouTube or Instagram are real. Fake content will need clear labels: a watermark, disclaimer or visible tag.

- Right to know who I’m talking to

Humans have the right to know whether the friendly person at the other end of a service line is another Human or an AI-powered bot. As these tools become more adept with language and more capable of passing the Turing test, these labels will only become more necessary.

How will this work in practice?

Once AI systems are out in the world, there will be checks to make sure they’re safe and compliant. This will involve monitoring and spot checks by authorities, as well as the reporting of problems.

Deployers will need to show “Human-in-the-Loop” oversight to the extent possible and monitor their input data for bias. They must keep the automatically generated logs for at least six months[i].

The Act gives market surveillance authorities the power to enforce the rules, investigate complaints and impose penalties for non-compliance. These can be stiff: engaging in “banned AI” can mean a fine of up to €35 million or 7% of total worldwide annual turnover, whichever is higher, and up to €15 million or 3% for the improper use of high-risk AI[ii].
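To see how the two fine ceilings play out for companies of different sizes, here is a small Python sketch. It assumes the “whichever is higher” rule for companies and uses only the two tiers quoted above; the tier names and the function `max_fine_eur` are hypothetical labels for illustration, not anything defined by the Act itself.

```python
from decimal import Decimal

# Hypothetical tier names; each maps to (fixed cap in EUR, share of
# total worldwide annual turnover), using the figures quoted in the text.
FINE_TIERS = {
    "banned_ai": (Decimal("35000000"), Decimal("0.07")),
    "high_risk": (Decimal("15000000"), Decimal("0.03")),
}


def max_fine_eur(tier: str, worldwide_turnover_eur: Decimal) -> Decimal:
    """Return the fine ceiling for a company in the given tier.

    Assumes the ceiling is whichever is higher: the fixed cap or the
    percentage of worldwide annual turnover.
    """
    fixed_cap, turnover_share = FINE_TIERS[tier]
    turnover_cap = worldwide_turnover_eur * turnover_share
    return max(fixed_cap, turnover_cap)


if __name__ == "__main__":
    # A large company (EUR 1 billion turnover) vs. a mid-sized one (EUR 100 million).
    for turnover in (Decimal("1000000000"), Decimal("100000000")):
        fine = max_fine_eur("banned_ai", turnover)
        print(f"Turnover EUR {turnover:,.0f}: banned-AI ceiling EUR {fine:,.0f}")
```

The point of the comparison: for a large company, 7% of turnover quickly dwarfs the €35 million figure, while for a smaller company the fixed amount is what bites. These are ceilings, so actual fines can be lower.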

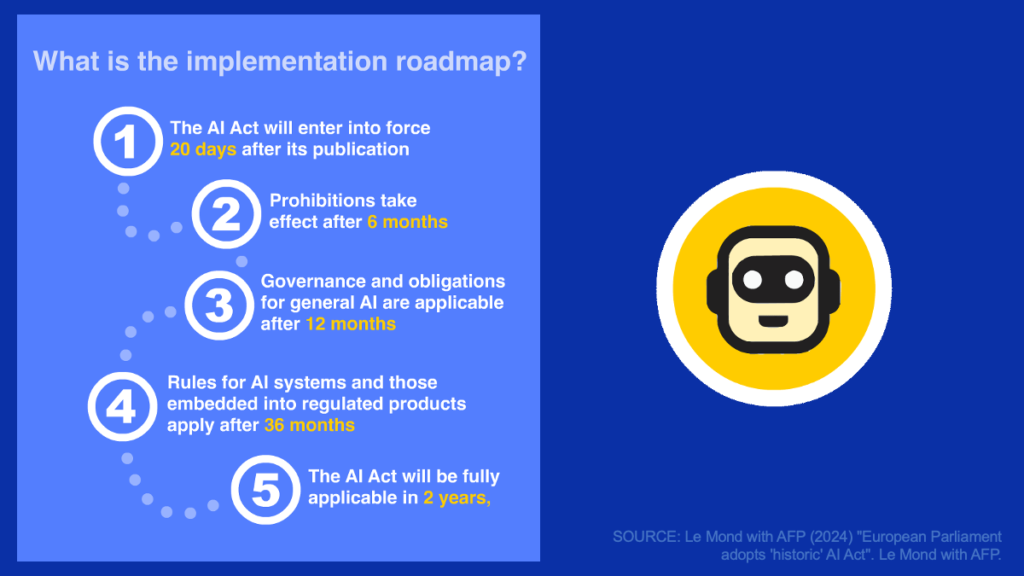

What’s the timeline?

The AI Act enters into force 20 days after publication in the EU Official Journal. After that, the following compliance timelines apply:

After 6 months: the bans on “banned AI” products and practices are enforced.

After 12 months: obligations take effect for all future GPAI releases.

After 24 months: retroactive enforcement begins for models already on the market before the Act came into force. The AI Act applies in full and most other obligations take effect.

After 36 months: obligations take effect for all new-release high-risk systems in the private and public sectors.

After 48 months: retroactive obligations take effect for high-risk AI systems used by public authorities that were on the market before the AI Act.
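If you want to pencil these milestones into a compliance calendar, the arithmetic is simple: add 20 days to the publication date, then count whole months from there. The Python sketch below does exactly that for a hypothetical publication date; the function names and milestone labels are illustrative assumptions. Treat the results as approximate, since the final text of the Act fixes the exact application dates, which can differ slightly from plain month counting.

```python
import calendar
from datetime import date, timedelta


def add_months(start: date, months: int) -> date:
    """Shift a date by whole calendar months, clamping to the month's last day."""
    month_index = start.month - 1 + months
    year = start.year + month_index // 12
    month = month_index % 12 + 1
    last_day = calendar.monthrange(year, month)[1]
    return date(year, month, min(start.day, last_day))


# Months after entry into force, paired with the milestones listed above.
MILESTONES = [
    (6, "Bans on 'banned AI' products and practices"),
    (12, "Obligations for future GPAI releases"),
    (24, "Act applies in full; most other obligations"),
    (36, "Obligations for new-release high-risk systems"),
    (48, "Retroactive obligations for public-authority high-risk systems"),
]


def compliance_timeline(publication: date) -> list[tuple[date, str]]:
    """Approximate milestone dates, counted from entry into force."""
    entry_into_force = publication + timedelta(days=20)
    timeline = [(entry_into_force, "Entry into force")]
    for months, label in MILESTONES:
        timeline.append((add_months(entry_into_force, months), label))
    return timeline


if __name__ == "__main__":
    # Hypothetical publication date, for illustration only.
    for when, label in compliance_timeline(date(2024, 5, 1)):
        print(when.isoformat(), label)
```

Clamping to the last day of the month keeps the helper from crashing on dates like January 31, which has no counterpart in February.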

How do I get help with system compliance?

EU-funded programs are being set up now to help businesses, especially small ones, develop and test new AI safely. The AI Office is designed to assist companies with compliance before the rules enter into force.

Need help with AI Integration?

Reach out to me for advice – I have a few nice tricks up my sleeve to help guide you on your way, as well as a few “insiders’ links” I can share to get you that free trial version you need to get started.

No eyeballs to read or watch? There’s a podcast for you!

- Listen to the APPLE PODCAST

- Listen to the SPOTIFY PODCAST

Search for the “Working Humans” podcast everywhere you like to listen. Twice a month, Fiona will dive into the nitty-gritty of employee engagement, communication, company culture and how AI is changing everything about how we work now and in the future. Subscribe so you never miss an episode. Rate, review and share.

About Fiona Passantino

Fiona is an AI Integration Specialist, coming at it from the Human approach; via Culture, Engagement and Communications. She is a frequent speaker, workshop facilitator and trainer.

Fiona helps leaders and teams engage, inspire and connect, empowered through our new technologies, to bring our best selves to work. She is a speaker, facilitator, trainer, executive coach, podcaster, blogger, YouTuber and the author of the Comic Books for Executives series. Her next book, “AI-Powered”, is due for release in October 2023.

[i] European Commission (2024) “AI Act; Shaping Europe’s digital future” The European Commission DG Connect. Accessed March 19, 2024. https://digital-strategy.ec.europa.eu/en/policies/regulatory-framework-ai#:~:text=The%20AI%20Act%20is%20the,play%20a%20leading%20role%20globally.&text=The%20AI%20Act%20aims%20to,regarding%20specific%20uses%20of%20AI.

[ii] European Commission (2024) “AI Act; Shaping Europe’s digital future” The European Commission DG Connect. Accessed March 19, 2024. https://digital-strategy.ec.europa.eu/en/policies/regulatory-framework-ai#:~:text=The%20AI%20Act%20is%20the,play%20a%20leading%20role%20globally.&text=The%20AI%20Act%20aims%20to,regarding%20specific%20uses%20of%20AI.

[i] European Parliament (2024) “Artificial Intelligence Act: MEPs adopt landmark law”. European Parliament News. Accessed March 19, 2024. https://www.europarl.europa.eu/news/en/press-room/20240308IPR19015/artificial-intelligence-act-meps-adopt-landmark-law

[i] Le Monde with AFP (2024) “European Parliament adopts ‘historic’ AI Act”. Le Monde with AFP. Accessed March 19, 2024. https://www.lemonde.fr/en/european-union/article/2024/03/13/european-parliament-adopts-historic-ai-act_6615022_156.html

[ii] Stibbe (2023) “The EU Artificial Intelligence Act: our 16 key takeaways” Stibbe Publications and Expertise. Accessed March 19, 2024. https://www.stibbe.com/publications-and-insights/the-eu-artificial-intelligence-act-our-16-key-takeaways

[iii] EuroNews.Next (2024) “EU AI Act reaction: Tech experts say the world’s first AI law is ‘historic’ but ‘bittersweet'” EuroNews.Next. Accessed March 19, 2024. https://www.euronews.com/next/2024/03/16/eu-ai-act-reaction-tech-experts-say-the-worlds-first-ai-law-is-historic-but-bittersweet#:~:text=The%20threat%20of%20AI%20monopolies,open%20source%20companies%20like%20Mistral.