AI-Powered Leadership

Fiona Passantino, late April, 2024

AI software is advancing at lightning speed. But what about the hardware? Moore’s Law states that the number of transistors on a microchip doubles every two years, and this is what drives the growth in computing power. But there are physical limits to how small transistors can be. Introducing Quantum Computing and the fascinating Qubit: an approach that leverages the principles of quantum mechanics to perform certain calculations much faster than classical computers. The wild world of the very, very small and its implications for the future of AI.

The End of Moore’s Law

Our world is run by massive supercomputers, pumping out raw compute at incredible speeds, powered by chips such as the Nvidia H100 GPU (Graphics Processing Unit), the Google TPU (Tensor Processing Unit) or Intel processors. Faster and faster they go, running on smaller and smaller silicon chips; every year, more transistors fit onto an ever-tinier wafer of silicon.

Moore’s Law states that the number of transistors on a chip, and with it computational power, doubles roughly every two years (a popular version of the law puts it at every 18 months)[i]. This has held true for more than 50 years, as ever more microscopic transistors have been squeezed onto each silicon chip. By now, IBM’s experimental chips have features only slightly larger than the silicon atoms they are built from[ii]. Intel’s transistor chips measure only 32 nanometers across, printed onto silicon with insulating layers only a few atoms thick[iii].
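Moore’s Law is, at heart, a statement about repeated doubling, and the numbers get large quickly. The minimal Python sketch below does that arithmetic. The function name `growth_factor` is our own, and it assumes a perfectly regular doubling period, which real chip history only approximates.

```python
# Sketch: growth after a span of years, assuming a fixed doubling period
# (two years by default, the transistor-count version of Moore's Law).

def growth_factor(years: float, doubling_period_years: float = 2.0) -> float:
    """Return the multiplication factor after `years` of steady doubling."""
    return 2 ** (years / doubling_period_years)

if __name__ == "__main__":
    for years in (10, 20, 50):
        print(f"{years} years -> about {growth_factor(years):,.0f}x more transistors")
```

With a two-year period, 50 years is 25 doublings, or 2^25 (about 33.5 million) times as many transistors. Assuming 18 months instead changes the answer by orders of magnitude, which is why the exact period matters.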

As we push the boundaries of what the traditional transistor chip can bear, there may soon be a ceiling to Moore’s Law. Physics is getting in the way at last. Any thinner, and the chips themselves become unstable.

Going Non-Binary

Our supercomputers, humming in massive warehouses outside Silicon Valley and governing advanced encryption and AI development, are starting to become limited by their own architecture. As chip size hits a wall, there is also the question of how information is processed: even the most powerful machines talk to themselves and to each other using the basic unit of binary code, the “bit”.

A bit can have two states: it is either on or off, “1” or “0”. When data is sent from one system to another, it travels as a long string of “0”s and “1”s that together represent a single pixel of color or a single letter of an alphabet.
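To make this concrete, the short Python sketch below shows how letters become strings of eight bits and back again. It assumes UTF-8 text, where a plain Latin letter takes exactly one byte; the helper names `to_bits` and `from_bits` are our own, for illustration only.

```python
# Sketch: turning text into bits and back, assuming UTF-8 encoding.

def to_bits(text: str) -> str:
    """Encode text as UTF-8 and show each byte as 8 binary digits."""
    return " ".join(format(byte, "08b") for byte in text.encode("utf-8"))

def from_bits(bits: str) -> str:
    """Reverse the process: read each 8-bit group back into a byte."""
    return bytes(int(group, 2) for group in bits.split()).decode("utf-8")

if __name__ == "__main__":
    encoded = to_bits("Hi")
    print(encoded)             # 01001000 01101001
    print(from_bits(encoded))  # Hi
```

Two letters already take sixteen on-off switches; a photo or a video multiplies that by millions.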

This is 20th-century transistor technology, and computing has worked this way since its earliest days, from Alan Turing’s theoretical “Turing machine” of the 1930s to the first computers that read their data from punched paper tape[iv]. This simple “on-off” chain descends from old-fashioned electrical systems like the telegraph, which broke or held an electrical current to send Morse code-style messages: a breaker switch communicating a “0” or a “1” in long data strings.

These systems have become incredibly fast today, whizzing along at the speed of thought, packing and unpacking long chains of code. But in the end, a classical transistor is still a binary switch, working through linear strings of “0”s and “1”s and moving from one computation to the next. And as transistors shrink, the oxide layer that holds back the flow of electrons (the breaker switch) eventually becomes too thin to do this reliably.

The amount of compute needed in the AI world is skyrocketing. With the Internet of Things (IoT), generative AI, robotics, self-driving cars and 5G and 6G phones all billowing out data in need of processing, the very future of tech is at stake. There is the issue of sheer data volume and increasing data complexity; some problems cannot be solved even with the computational power of the best supercomputers. So, what comes next?

What’s smaller than a row of atoms on which to print a chip? The atoms themselves.

A Quantum particle is the smallest thing we Humans can observe and work with: a subatomic creature such as a single electron or photon. Quantum Mechanics is the physics that governs the behavior of these very small particles. This is the language of nature, guiding processes like photosynthesis and genetics.

At this size, things become genuinely weird. Think of a whizzing system of electrons around a nucleus; they change states, live in multiple dimensions, exist in many places at the same time and act in tandem with one another in a baffling way we still do not completely understand. They are both a particle and a wave, and in an energetic state, we only know the probability of where they actually reside.

The Magical World of the Unimaginably Small

A Quantum computer uses subatomic particle-waves called “Quantum Bits” or “Qubits”. Unlike a binary bit (the “0”s and “1”s), a Qubit can exist in a combination of states at once, which vastly expands the range of states the machine can work with. This is like going from a 6-digit numeric password to one that is 500 characters long, using every letter of the alphabet from every language on earth as well as every possible digit, symbol and accent known to man[v]. This is the magical property known as “superposition”.
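A simplified way to picture superposition is to describe a Qubit by two numbers (“amplitudes”), one for “0” and one for “1”. The Python sketch below is a classical simulation under that textbook model, not a quantum computer, and the function names are our own. It also shows the catch: describing n Qubits classically takes 2^n amplitudes, which is why ordinary computers struggle to keep up. When a Qubit is measured, it still gives a single “0” or “1”, with probabilities set by those amplitudes.

```python
import math

# Sketch: a single qubit modelled as two amplitudes (alpha, beta).
# Measuring it gives 0 with probability |alpha|^2 and 1 with probability |beta|^2.

def measurement_probabilities(alpha: complex, beta: complex) -> tuple[float, float]:
    p0, p1 = abs(alpha) ** 2, abs(beta) ** 2
    if not math.isclose(p0 + p1, 1.0):
        raise ValueError("amplitudes must satisfy |alpha|^2 + |beta|^2 = 1")
    return p0, p1

def amplitudes_needed(n_qubits: int) -> int:
    """A classical simulation must track 2**n amplitudes for n qubits."""
    return 2 ** n_qubits

if __name__ == "__main__":
    # An equal superposition of 0 and 1 (what a Hadamard gate makes from |0>).
    a = b = 1 / math.sqrt(2)
    print(measurement_probabilities(a, b))  # roughly (0.5, 0.5)
    for n in (1, 10, 50):
        print(n, "qubits ->", amplitudes_needed(n), "amplitudes")
```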

Quantum computers are also able, in effect, to explore many calculations at the same time, as opposed to the linear, “brute force” approach of the binary transistor. This is known as “parallelism”. It makes them much faster at solving certain types of problems, such as factoring the large numbers that underpin today’s encryption.
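One well-studied example of a problem where quantum computers are expected to be faster is unstructured search: finding one marked item among N possibilities. The Python sketch below classically simulates Grover’s search algorithm. The article does not name this technique; we use it only as an illustration, and the function name and marked item are hypothetical. Grover needs about π/4·√N steps, where a classical guess-and-check needs about N/2 tries on average.

```python
import math

# Sketch: classical simulation of Grover's search over N = 2**n items with one
# marked item. Real quantum hardware would apply these steps as gates; here we
# just update the list of amplitudes directly.

def grover_success_probability(n_qubits: int, marked: int) -> tuple[int, float]:
    size = 2 ** n_qubits
    amps = [1 / math.sqrt(size)] * size          # start in a uniform superposition
    iterations = math.floor(math.pi / 4 * math.sqrt(size))
    for _ in range(iterations):
        amps[marked] = -amps[marked]             # oracle: flip the marked item's sign
        mean = sum(amps) / size
        amps = [2 * mean - a for a in amps]      # diffusion: reflect about the mean
    return iterations, amps[marked] ** 2         # probability of measuring the answer

if __name__ == "__main__":
    for n in (3, 10):
        steps, p = grover_success_probability(n, marked=5)
        print(f"N={2**n}: {steps} Grover steps, success ~{p:.3f}; "
              f"classical average ~{2**n // 2} guesses")
```

The speedup here is quadratic rather than exponential. Factoring large numbers with Shor’s algorithm is the better-known case of a much larger quantum advantage.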

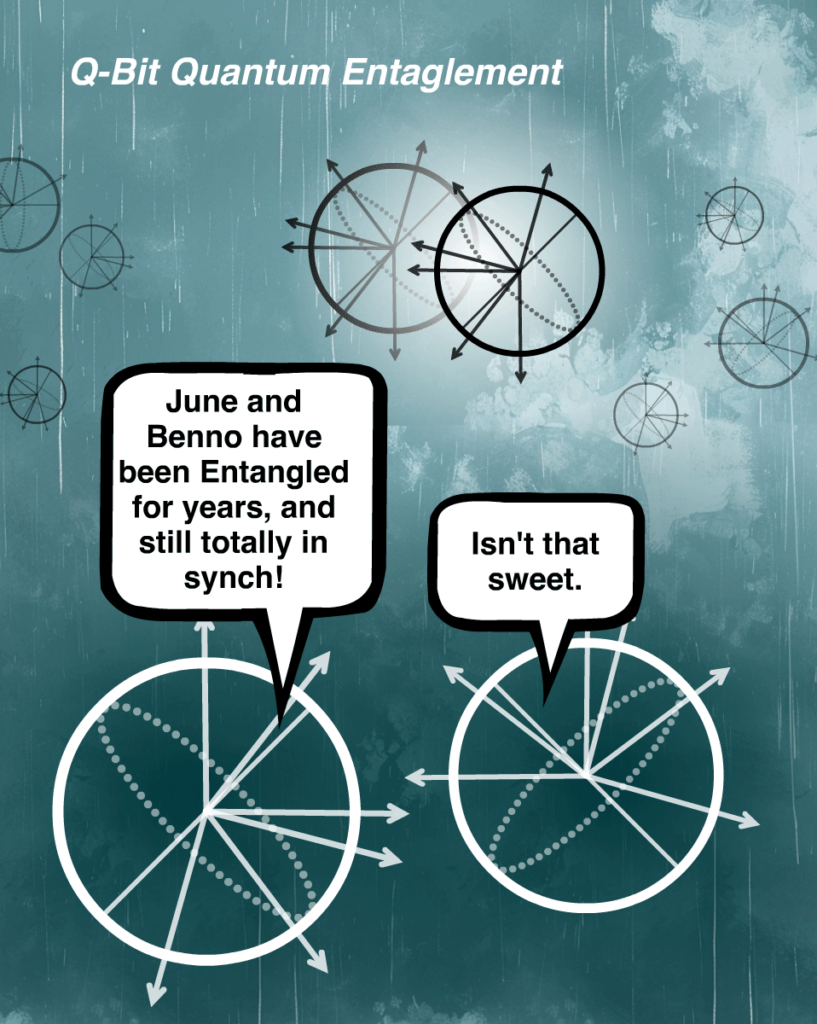

The strangest of all the Qubit’s mysterious powers is “entanglement”. Two Qubits in entirely different physical locations can become linked, so that their states mirror each other no matter how far apart they might be. Thus entangled, measuring a Qubit in Greece instantly tells you what its partner in Australia will show; the two results are perfectly correlated[vi]. This correlation cannot be used to send messages faster than light, but it lets a quantum computer coordinate its Qubits in ways no classical system can, which is a key source of its computing power.
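A classical simulation can only reproduce the statistics of entanglement, not the physics, but it helps show what it means in practice. The Python sketch below samples measurements from an entangled two-Qubit “Bell state”, assuming ideal, noise-free Qubits; the names are our own. Each Qubit on its own behaves like a fair coin, yet the pair always agrees.

```python
import math
import random

# Sketch: sampling measurements of a Bell state (|00> + |11>) / sqrt(2),
# simulated classically. Basis order: 00, 01, 10, 11.
BELL_STATE = [1 / math.sqrt(2), 0.0, 0.0, 1 / math.sqrt(2)]
OUTCOMES = ["00", "01", "10", "11"]

def measure_pair(state: list[float], shots: int, seed: int = 0) -> list[str]:
    rng = random.Random(seed)
    weights = [abs(a) ** 2 for a in state]  # probability = |amplitude|^2
    return rng.choices(OUTCOMES, weights=weights, k=shots)

if __name__ == "__main__":
    results = measure_pair(BELL_STATE, shots=1000)
    agree = sum(r[0] == r[1] for r in results)
    print(f"qubits agreed in {agree} of {len(results)} shots")
    print("share of '00':", results.count("00") / len(results))
```

Notice that neither side gets to choose its outcome; each result is random. That is why the correlation alone cannot carry a message.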

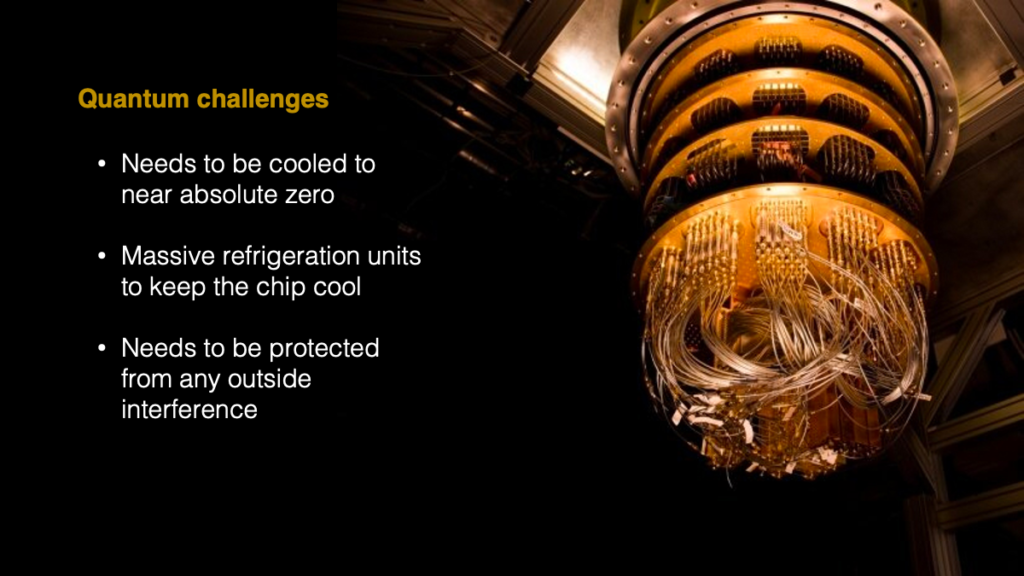

Quantum Computing is still an emerging field with significant technical and logistical challenges ahead of us. But it represents the future evolutionary jump for our processing power. It will be years before you see a Quantum chip in your laptop or smartphone, if ever, mostly because of the machinery needed to harness this technology.

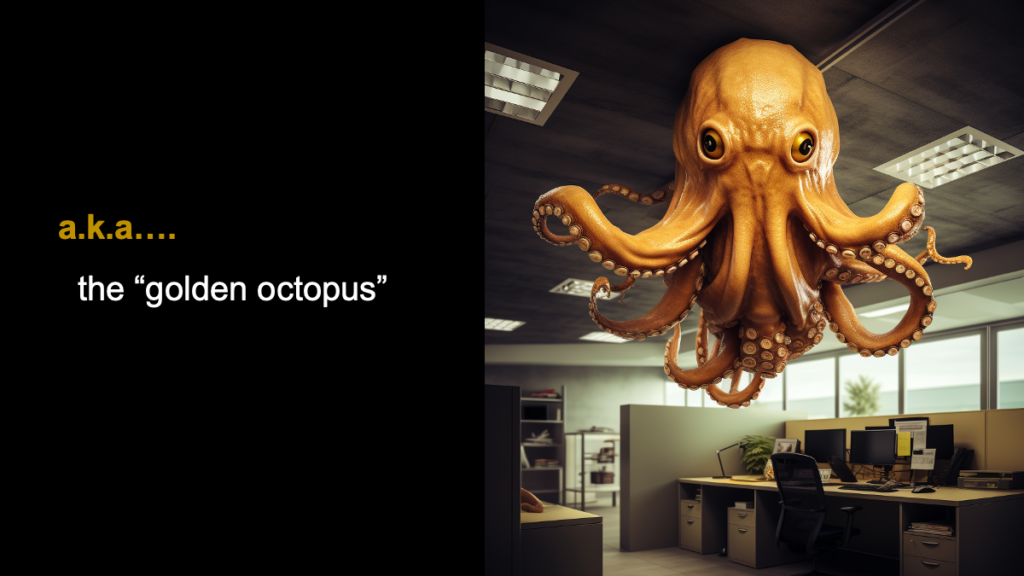

The Golden Octopus

A Quantum processor doesn’t look that different from the one in your laptop, but the hardware system around it is enormously complex. It’s about the size of a car, hanging from the ceiling in an intricate system of shining tubes that many of us call the “golden octopus”[vii]. The tubes are part of a robust cooling system that keeps the superconducting processor ultra-cold, near Absolute Zero; likely the coldest place in the known universe. This extreme cold keeps the circuits superconducting and quiets the thermal jitter that would otherwise scramble the fragile quantum states, so they can be read and written reliably. The casing also shields and stabilizes the system, since any movement or outside interference transfers energy and thus disrupts the readings.

These obstacles are not stopping the big tech companies from investing heavily in this technology. How big is Quantum computing? Big enough to have attracted the attention of some of the world’s largest tech companies, including IBM, Microsoft, Google, D-Wave Systems, Alibaba, Nokia, Intel, Airbus, HP, Toshiba, Mitsubishi, SK Telecom, NEC, Raytheon, Lockheed Martin, Rigetti, Biogen, Volkswagen, and Amgen[viii]. According to Gartner, 40% of large companies plan to use this technology by 2025[ix].

It’s a race to commercialize this technology. Once it’s out there, this sort of computing power has enormous implications for many aspects of our lives. Quantum computers have the potential to crack the encryption we currently use to secure banking transactions, government secrets and personal information. Blockchains could be vulnerable to a “hash break”: if the cryptographic hashes that link the blocks together and underpin the entire system can be broken, the chain becomes “mutable”, meaning it can be changed without a record, and thus unusable.
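Why does factoring matter for encryption? Widely used schemes such as RSA rely on the fact that multiplying two large primes is easy, while factoring their product is extremely hard for classical computers. Shor’s algorithm, run on a large enough error-corrected quantum computer, could factor such numbers efficiently. The Python sketch below uses the classic textbook RSA example (primes 61 and 53) to show that whoever can factor the public number can rebuild the private key. It is a toy, not real cryptography, and the helper name is hypothetical.

```python
# Sketch: a textbook-sized RSA key, and how knowing the factors of n breaks it.
# Real keys use n with hundreds of digits; these tiny numbers are for illustration only.

def factor_by_trial_division(n: int) -> tuple[int, int]:
    """Brute-force factoring: fine for tiny n, hopeless for real RSA moduli."""
    d = 2
    while d * d <= n:
        if n % d == 0:
            return d, n // d
        d += 1
    raise ValueError("n has no non-trivial factors")

if __name__ == "__main__":
    # Public key (n, e), built from secret primes p = 61 and q = 53.
    n, e = 61 * 53, 17
    message = 65
    ciphertext = pow(message, e, n)   # encrypt with the public key

    # An attacker who can factor n can rebuild the private key.
    p, q = factor_by_trial_division(n)
    phi = (p - 1) * (q - 1)
    d = pow(e, -1, phi)               # modular inverse (Python 3.8+)
    recovered = pow(ciphertext, d, n)
    print(p, q, d, recovered)         # 53 61 2753 65
```

Trial division takes only a few dozen steps here, but the work explodes as the numbers grow, which is exactly the gap a quantum computer running Shor’s algorithm could close.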

The promise of Quantum modelling defies our imagination. It will likely be able to test chemical reactions in a fraction of the time, which might lead us to cures for diseases such as cancer, Alzheimer’s or MS. Quantum-speed discovery of CO2 catalysts could help us achieve carbon neutrality and reverse the effects of global warming, and similar modelling could help develop super batteries, better solar panels and desalination technology[x].

What will this mean for AI? Already, AI is being used to train and fine-tune Quantum computers. As these tools evolve, AI itself will be Quantum-powered, allowing our LLMs to become larger and faster, processing more data many thousands of times faster than today while using less energy.

The Quantum revolution will herald a third wave in AI, which may mean reaching AGI even faster than before, for better or worse.

Need help with AI Integration?

Reach out to me for advice – I have a few nice tricks up my sleeve to help guide you on your way, as well as a few “insiders’ links” I can share to get you that free trial version you need to get started.


About Fiona Passantino

Fiona is an AI Integration Specialist, coming at it from the Human approach; via Culture, Engagement and Communications. She is a frequent speaker, workshop facilitator and trainer.

Fiona helps leaders and teams engage, inspire and connect; empowered through our new technologies, to bring our best selves to work. She is a speaker, facilitator, trainer, executive coach, podcaster, blogger, YouTuber and the author of the Comic Books for Executives series. Her next book, “AI-Powered”, is due for release soon.

[i] Carter (2018) “Silicon chips are reaching their limit” Tech Radar. Accessed January 7, 2024. https://www.techradar.com/news/silicon-chips-are-reaching-their-limit-heres-the-future

[ii] Dunhill (2023) “What Is Moore’s Law, And Is It Dead?” IFL Science. Accessed January 7, 2023. https://www.iflscience.com/what-is-moore-s-law-and-is-it-dead-67138

[iii] Strickland (2023) “How small can CPUs get?” How Stuff Works. Accessed September 27, 2023. https://computer.howstuffworks.com/small-cpu2.htm

[iv] Mullins (2012) “What is a Turing machine?” University of Cambridge. Accessed September 27, 2023. https://www.cl.cam.ac.uk/projects/raspberrypi/tutorials/turing-machine/one.html

[v] Frankenfield (2023) “Quantum Computing: Definition, How It’s Used, and Example” Investopedia. Accessed September 27, 2023. https://www.investopedia.com/terms/q/quantum-computing.asp

[vi] Frankenfield (2023) “Quantum Computing: Definition, How It’s Used, and Example” Investopedia. Accessed September 27, 2023. https://www.investopedia.com/terms/q/quantum-computing.asp

[vii] IBM (2023) “What is quantum computing?” IBM Quantum. Accessed September 27, 2023. https://www.ibm.com/topics/quantum-computing

[viii] Frankenfield (2023) “Quantum Computing: Definition, How It’s Used, and Example” Investopedia. Accessed September 27, 2023. https://www.investopedia.com/terms/q/quantum-computing.asp

[ix] Castellanos (2021) “Google Aims for Commercial-Grade Quantum Computer by 2029: Tech giant is one of many companies racing to build a business around the nascent technology” The Wall Street Journal. Accessed September 27, 2023. https://www.wsj.com/articles/google-aims-for-commercial-grade-quantum-computer-by-2029-11621359156

[x] Forbes Technology Council Expert Panel (2023) “15 Significant Ways Quantum Computing Could Soon Impact Society” Forbes Magazine. Accessed September 27, 2023. https://www.forbes.com/sites/forbestechcouncil/2023/04/18/15-significant-ways-quantum-computing-could-soon-impact-society/?sh=392cf80648bb